To AI or not to AI?

The use of AI technologies has always been susceptible to charges of potential bias due to the skewed datasets that large language models have been trained on. But surely firms are making sure those biases have been ironed out, right? Sadly, when it comes to AI and recruitment, not all applications of the technology are the same, so firms need to tread carefully. In other words – if you don’t understand it, don’t use it.

Since the launch of ChatGPT at the end of 2022, it has been difficult to read a newspaper, blog or magazine without some reference to the strange magic of AI. It has enthused and concerned people in equal measure, and recruiters are no different. For every gain in our ability to understand and work with huge amounts of information, there appear to be drawbacks around data bias and inappropriate uses.

Scismic is part of the larger company Digital Science, and both have been developing AI-focused solutions for many years. From that experience comes an understanding that responsible development and implementation of AI is crucial, not just because it is ‘the right thing to do’, but because it results in better solutions for customers, who in turn can trust Digital Science and Scismic as partners during a period of such rapid change and uncertainty.

AI in focus

The potential benefits of using AI in recruitment are quite clear. Generative AI tools such as ChatGPT can scan and interpret large amounts of data quickly and easily, potentially saving time and money during screening. The screening process itself may also be improved by automatically picking up keywords and phrases in applications, while communications about the hiring process can be improved with AI-powered automated tools.

But, of course, there is a downside. Using AI too much seems to take the ‘human’ out of Human Resources, and AI itself is only as good as the data it has been trained on. A major issue with AI in recruitment has been highlighted by a recent brief issued by the US Equal Employment Opportunity Commission (EEOC) in support of an individual who claims that one vendor’s AI-based hiring tool discriminated against them and others. The EEOC has recently brought cases against the use of the technology, and its position suggests that vendors, as well as employers, can be held responsible for the misuse of AI-based tools.

When should we use AI?

In general, if you don’t understand it, do not use it. Problems arise for vendors and recruiters alike when it comes to adopting AI tools at scale. While huge datasets offer the advantages set out above, they also introduce biases on top of the human biases that employers and employees have been dealing with for years. Indeed, rather than extol the virtues of using AI, it is perhaps more instructive to explain how NOT to use this powerful new technology.

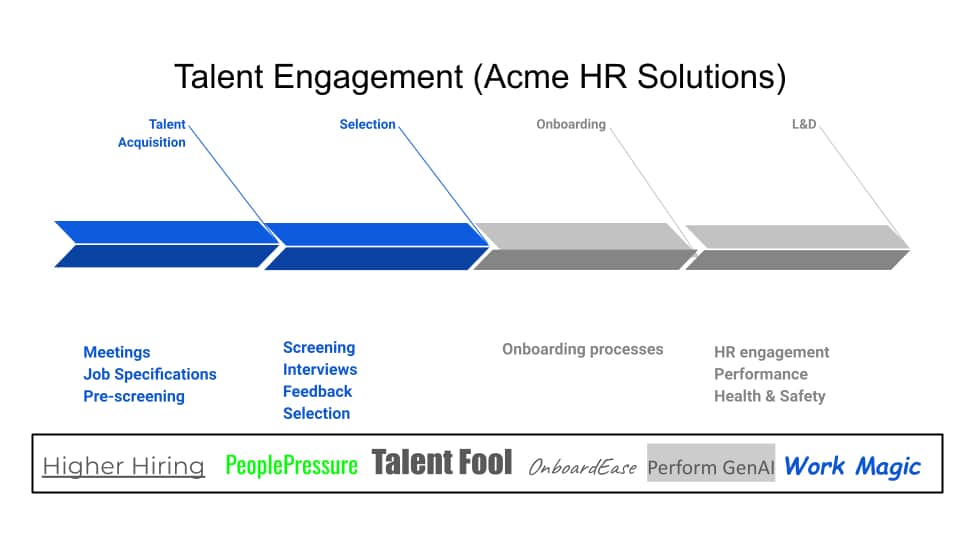

Scismic is a responsible and ethical developer of AI-based recruitment solutions, so colleagues there were surprised to see a slide like the one above at a recent event. While it was designed to show employers the advantages of AI-based recruitment technology, it actually highlights the dangers of ‘layering’ AI systems on top of each other. The client company loses even more visibility into whom the system is selecting and how, increasing the risk of bias, of missing good candidates and, ultimately, of legal challenge.

In this scenario, with so many technologies layered onto each other throughout the workflow, it is almost impossible to understand how the candidate pipeline was developed, where candidates were excluded, and at which points bias has caused further bias in the selection process!

While the list of AI tools used in the process is impressive, what is less impressive from a recruitment perspective is the layer upon layer of potential bias these tools might introduce to the recruitment process.

Scismic offers a different approach. AI is used to REMOVE biases in datasets, and mitigating processes are introduced so that the advantages of automation are retained while ensuring a fairer and more ethical recruitment program for employers.

Positive Discrimination?

Scismic’s technology focuses on objective units of qualification – skills. We also use AI to reduce the bias that comes from the different terminology people use to describe skills. This gives us two ways to reduce evaluation bias:

- Blinded candidate matching technology that relies on objective units of qualification – skills

- Removing the bias introduced by the terminology candidates use to describe their skill sets
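To make the two mechanisms above more concrete, here is a minimal Python sketch of blinded, skills-based matching with terminology normalisation. It is an illustration under stated assumptions, not Scismic’s implementation: the synonym table, the skill names and the `normalise`, `blind` and `match_score` functions are all hypothetical, and a real system would rely on a much larger skills taxonomy than a hand-written dictionary.

```python
from dataclasses import dataclass

# Hypothetical synonym table: maps the different terms candidates might use
# for the same skill onto one canonical name, so wording does not affect scoring.
SKILL_SYNONYMS = {
    "qpcr": "quantitative pcr",
    "real-time pcr": "quantitative pcr",
    "tissue culture": "cell culture",
    "python": "python programming",
}


@dataclass
class Candidate:
    name: str      # identifying field: never used for matching
    age: int       # identifying field: never used for matching
    skills: list   # raw skill terms exactly as the candidate wrote them


def normalise(skills):
    """Map each raw skill term to its canonical form; unknown terms are just lower-cased."""
    canonical = set()
    for term in skills:
        key = term.strip().lower()
        canonical.add(SKILL_SYNONYMS.get(key, key))
    return canonical


def blind(candidate):
    """Return only the normalised skill set, dropping name, age and other fields."""
    return normalise(candidate.skills)


def match_score(blinded_skills, required_skills):
    """Fraction of the role's required skills found in the blinded profile (0.0 to 1.0)."""
    required = normalise(required_skills)
    if not required:
        return 0.0
    return len(blinded_skills & required) / len(required)


if __name__ == "__main__":
    role = ["qPCR", "Tissue culture", "Python"]
    older = Candidate("Candidate A", 60, ["Real-time PCR", "cell culture"])
    younger = Candidate("Candidate B", 25, ["qPCR", "Tissue Culture"])
    # Same skills described in different words, different ages: identical scores.
    print(round(match_score(blind(older), role), 2))    # prints 0.67
    print(round(match_score(blind(younger), role), 2))  # prints 0.67
```

Because only the normalised skill set reaches the scoring step, two candidates who describe the same skills in different words receive the same score regardless of age. A simple overlap fraction is used here purely for readability; the scoring function is itself a design choice that should stay open to inspection.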

What type of AI is being used?

To help explain how Scismic does this, we can split AI into subjective (or Generative) AI like ChatGPT, and objective AI. Subjective AI is, broadly, a contextual system that makes assumptions about what to provide the user based on the user’s past interactions and its own ability to use context. This kind of system can work well for human interactions (such as chatbots), which is what it was designed for.

However, when applied to decision making about people and hiring (which is already an area fraught with difficulty), subjective and contextual systems can simply reinforce existing bias or generate new bias. For example, if a company integrates a GenAI product into its Applicant Tracking System (ATS) and the system identifies that most of the people in it share a particular characteristic, then the system will assume that is what the company wants. Clearly, if the company is actually trying to broaden its hiring pool, this can have a very negative effect, and one that can also be challenged in court.

Objective AI works differently, as it does not look at the context around the instruction given but only at the core components it was asked for. This means it doesn’t make assumptions while accumulating the initial core results (data), but it can provide further objective details on the dataset. In many ways it is a ‘cleaner’ system, and because it is focused and transparent, it is the better choice for removing unintended bias.

AI is a tool and, as with so many jobs that require tools, the question is often: what is the best tool to use? In short, in a hiring process we recommend the tool that produces better results with less bias.

Case by case

To show how well things can turn out when ‘objective AI’ is used responsibly and astutely, here are three case studies that illustrate some genuinely positive outcomes:

- The right AI: With one customer, Scismic was hired to introduce a more diverse pool of talent, as the company was 80% white males, and those white males were hiring more white males to join them. After the company introduced Scismic’s recruitment solution, the percentage of diverse applicants across the first five roles it advertised rose from 48% to 76%.

- The right approach: One individual who had been unlucky in finding a new role in life sciences for a very long time finally found a job through Scismic. The reason for his long search? He was 60 years old. With some AI-based hiring processes, his profile may well have been ignored as an outlier due to his age if a firm typically hired younger people. However, once this bias was removed, he finally overcame ageism – whether it had been AI- or human-induced – and found a fulfilling role with a very grateful employer.

- The right interview: Another candidate being helped by Scismic is neurodivergent and, as a result, appears to struggle to succeed in interviews. An AI-based scan of this person’s track record might see a string of failed interviews and therefore point them to different roles or levels of responsibility. But failing at interviews does not necessarily mean the person is unsuited to the role, and human intervention is much more likely to facilitate positive outcomes than using AI as a shortcut that misdiagnoses the issue.

When not to use AI?

One aspect highlighted in these case studies is that while AI can be important, knowing when NOT to use it can be equally important, as can understanding that it is not a panacea for all recruitment problems. For instance, it is not appropriate to use AI when you or your team don’t understand what the AI intervention is doing to your applicant pipeline and selection process.

Help in understanding when and when not to use AI can be found in a good deal of new research, which shows how AI is perhaps best used as a partner in recruitment rather than something in charge of the whole or even part of the process. This idea – known by some as ‘co-intelligence’ – requires a good deal of work and development on the human side, and key to this is having the right structures in place for AI and people to work in harmony.

For example, market data shows that employee turnover in the life sciences and medical services is over 20%, partly because the right structures and processes are not in place during recruitment. Using AI in the wrong way can increase bias and lead to hiring the wrong people, thus increasing this churn. However, using AI in a structured and fair way could start to reverse this trend.

In addition, reducing bias in the recruitment process is not only about whether or not to use AI – sometimes it is about ensuring the human element is optimized. For instance, recent research shows that properly structured interviews can reduce bias in recruitment and lead to much more positive outcomes.

With recruitment comes responsibility

It is clear that AI offers huge opportunities in the recruitment space for employees and employers alike, but this comes with significant caveats. For recruiters and vendors alike, the focus when developing new solutions has to be on how they can be produced and implemented responsibly, ethically and fairly. This should be the minimum demand of employers, and is certainly the minimum expectation of employees. The vision of workplaces becoming fairer due to the adoption of ethically developed AI solutions is not only a tempting one, it is one that is within everyone’s grasp. But it can only be achieved if the progress made in recent decades in implementing fairer HR practices is not lost in the gold rush of chasing AI. As a general rule, recruiters and talent partners should understand these components of the technologies they are using:

- What is the nature of the dataset the AI model has learnt from?

- Where are the potential biases and how has the vendor mitigated these risks?

- How is the model making the decision to exclude a candidate from the pipeline? And do you agree with that premise?

Understanding the steps involved in creating this structure can be instructive – and will be the focus of our next article, ‘Implementing Structured Talent Acquisition Processes to Reduce Bias in your Candidate Evaluation’. In the meantime, you can contact Peter Craig-Cooper at Peter@scismic.com to learn more about our solutions.


About the Author

Simon Linacre, Head of Content, Brand & Press | Digital Science

Simon has 20 years’ experience in scholarly communications. He has lectured and published on the topics of bibliometrics, publication ethics and research impact, and has recently authored a book on predatory publishing. Simon is an ALPSP tutor and has also served as a COPE Trustee.
