The group includes a number of students, who all share the merit of applying this innovative computing concept to a particle physics problem, so let me list their names here: Fabio Cufino, Muhammad Awais, Enrico Lupi, Jinu Raj, Eleonora Porcu; plus myself, Prof. Fredrik Sandin from Luleå University of Technology, and Mia Tosi from the University of Padova. We are preparing a paper describing the results of our studies, which should be out soon. Here I will just describe the concept we worked on.

First of all, why use neuromorphic computing to identify the trajectories of charged particles? Doesn't regular computing work well at the task? Yes, it does, but neuromorphic computing offers a number of advantages, which we are only starting to understand and leverage. To begin with, while a digital computer moves bits around every nanosecond or so, and thus consumes a lot of energy, a neuromorphic system does nothing when no impulses reach its neurons. Information also flows in a different way, being encoded in the time distribution of the impulses traveling across the system. Even data storage works differently: in a neuromorphic system the data do not sit elsewhere waiting to be summoned by the CPU when needed, which causes what is called the “von Neumann bottleneck”; rather, they reside in the neuron synapses, so there is an effective “co-location” of data and computing.

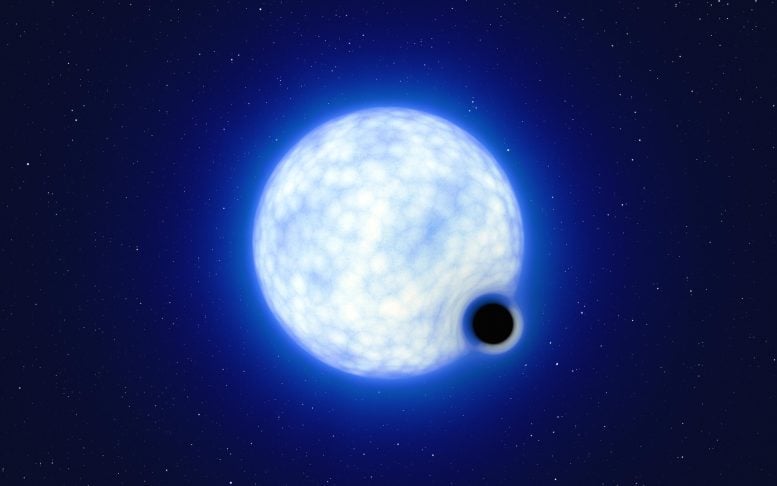

The network we use to learn track patterns is quite simple: it consists of two layers of neurons that receive data from the silicon detector layers and are connected to each other. Each impulse encodes, in its time of arrival, the azimuthal position of the corresponding ionization deposit on a silicon detector (see figure). When an impulse arrives at a synapse, it is multiplied by a “weight” that converts it into an increase of the neuron's potential. If the potential reaches a certain threshold, the neuron fires an impulse toward other neurons or to the network output.

Above: the positions of the hits on each layer from a real track (in blue) and from noise (in red) can be encoded as azimuthal angles, each of which becomes the generation time of a pulse in the corresponding channel. [Figure courtesy E. Coradin / F. Cufino]
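To make the encoding and the neuron dynamics described above a bit more concrete, here is a minimal Python sketch. It assumes a linear mapping of the azimuthal angle onto a spike time within a fixed window (`T_WINDOW` is a hypothetical constant) and models each neuron as a leaky integrate-and-fire unit: the potential decays exponentially, each incoming spike adds its synaptic weight, and the neuron fires and resets when the threshold is crossed. The parameter values are illustrative only, and the sketch ignores track curvature, so a track shows up as nearly simultaneous spikes on all layers.

```python
import math

# Hypothetical encoding window: an azimuthal angle in [0, 2*pi) is mapped
# linearly onto a spike time in [0, T_WINDOW). Illustrative only.
T_WINDOW = 1.0  # arbitrary time units


def phi_to_spike_time(phi: float) -> float:
    """Map an azimuthal angle (radians) to a spike emission time."""
    return T_WINDOW * (phi % (2 * math.pi)) / (2 * math.pi)


class LIFNeuron:
    """Leaky integrate-and-fire neuron driven by weighted input spikes."""

    def __init__(self, weights, threshold=1.0, tau=0.1):
        self.weights = list(weights)  # one synaptic weight per input channel
        self.threshold = threshold    # potential at which the neuron fires
        self.tau = tau                # decay time constant of the potential

    def run(self, spikes):
        """spikes: iterable of (time, channel). Returns output spike times."""
        potential, last_t, out = 0.0, 0.0, []
        for t, ch in sorted(spikes):
            # Exponential leak of the potential since the previous input spike.
            potential *= math.exp(-(t - last_t) / self.tau)
            last_t = t
            # The synaptic weight converts the spike into a potential increase.
            potential += self.weights[ch]
            if potential >= self.threshold:
                out.append(t)    # fire ...
                potential = 0.0  # ... and reset
        return out


# Toy usage: three layers hit at nearly the same azimuth (track-like) ...
track = [(phi_to_spike_time(0.50 + 0.01 * i), i) for i in range(3)]
# ... versus hits scattered in azimuth (noise-like).
noise = [(phi_to_spike_time(p), i) for i, p in enumerate([0.5, 2.5, 4.5])]
neuron = LIFNeuron(weights=[0.4, 0.4, 0.4])
print(neuron.run(track))  # one output spike
print(neuron.run(noise))  # []
```

With these toy values, three nearly coincident spikes push the potential over threshold, while the same three spikes spread across the window leak away before they can add up.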

The way such a simple network learns to distinguish sequences of hits that belong to tracks from random patterns of noise is surprising, because there is no supervision here: the system learns patterns in a fully unsupervised way. Learning proceeds by potentiating the synapses whose input pulses play a role in making the neuron fire, and depressing those whose inputs are unrelated to the discharge. This mechanism is called spike-timing-dependent plasticity (STDP). In our scheme, however, we also let neurons learn to introduce a variable delay in the processing of the input pulses.
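A simplified version of such a learning step might look like the sketch below. It is a textbook-style STDP update, not the exact rule used in our work: after the neuron fires at time `t_post`, each input that arrived before the output spike is potentiated by an amount that decays exponentially with the time difference, and each input that arrived afterwards is depressed. The delay update is a hypothetical rule added purely for illustration: it lengthens the delay of every causal input so that, on the next event, it arrives closer to the firing time and hence closer to the other inputs. All constants (`a_plus`, `a_minus`, `tau_stdp`, `eta_delay`, `w_max`) are assumptions.

```python
import math


def stdp_update(weights, delays, input_spikes, t_post,
                a_plus=0.05, a_minus=0.03, tau_stdp=0.02,
                eta_delay=0.1, w_max=1.0):
    """One simplified STDP step, applied after the neuron fires at t_post.

    weights, delays: per-channel lists, modified in place.
    input_spikes: list of (emission_time, channel) seen in this event.
    All constants are illustrative assumptions.
    """
    for t_pre, ch in input_spikes:
        arrival = t_pre + delays[ch]  # the spike reaches the synapse after its delay
        dt = t_post - arrival         # >= 0 means the input preceded the output spike
        if dt >= 0:
            # Causal input: potentiate, more strongly the closer it was to firing.
            weights[ch] += a_plus * math.exp(-dt / tau_stdp)
            # Hypothetical delay rule: push the arrival time toward t_post, so this
            # input becomes more coincident with the others on the next event.
            delays[ch] += eta_delay * dt
        else:
            # Input arrived after the discharge and did not cause it: depress.
            weights[ch] -= a_minus * math.exp(dt / tau_stdp)
        # Keep weights within [0, w_max].
        weights[ch] = min(max(weights[ch], 0.0), w_max)
```

Repeating this update over many events strengthens the synapses that consistently contribute to firing, which is what lets a neuron specialize on recurring hit patterns without any labels.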

After seeing a stream of a few tens of thousands of “events”, each containing either track hits or just noise hits, the network starts firing consistently only when true track hit patterns come in, and stays silent when no such pattern is present. When I first saw similar behavior in a paper describing anomaly detection with the STDP mechanism, I had an epiphany and decided I had to code up the mechanism for some particle physics application. This led to the work we are finalizing now.
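For readers who want to play with the idea, a toy generator of such an event stream could look like the sketch below. The layer radii, magnetic field, momentum values and track fraction are all hypothetical choices. It uses the standard relation for a prompt track in a uniform field: the track bends on a circle of radius R ≈ pT / (0.3 B) (R in metres, pT in GeV, B in tesla), so at radius r its azimuth is offset from the initial direction by arcsin(r / 2R). Noise events simply get one uniformly random hit per layer. The sign convention for the charge is arbitrary.

```python
import math
import random

LAYER_RADII_M = [0.1, 0.2, 0.3]  # hypothetical silicon layer radii (metres)
B_FIELD_T = 3.8                  # hypothetical solenoid field (tesla)


def track_hits(pt_gev, charge, phi0, radii=LAYER_RADII_M, b_tesla=B_FIELD_T):
    """Azimuth (radians) of a prompt track's hit on each layer."""
    radius = pt_gev / (0.3 * b_tesla)  # bending radius in metres
    return [(phi0 - charge * math.asin(r / (2 * radius))) % (2 * math.pi)
            for r in radii]


def noise_hits(rng, n_layers=len(LAYER_RADII_M)):
    """One uncorrelated, uniformly distributed hit per layer."""
    return [rng.uniform(0.0, 2 * math.pi) for _ in range(n_layers)]


def event_stream(n_events, p_track=0.5, pt_choices=(1.0, 2.0, 5.0), seed=0):
    """Yield (is_track, pt, per-layer azimuths) for a mixed stream of events."""
    rng = random.Random(seed)
    for _ in range(n_events):
        if rng.random() < p_track:
            pt = rng.choice(pt_choices)
            charge = rng.choice((-1, 1))
            phi0 = rng.uniform(0.0, 2 * math.pi)
            yield True, pt, track_hits(pt, charge, phi0)
        else:
            yield False, None, noise_hits(rng)
```

Lower-momentum tracks bend more, so their hits spread over a wider range of azimuthal angles across the layers; this is the pattern difference that the output neurons have to become selective to.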

The network we have can identify true patterns with over 95% efficiency, while firing when no track is present in the input stream only a few percent of the time. This can be very useful in a trigger application. The tracks we simulate have different momenta, which changes the pattern of hit positions in azimuthal angle, as tracks of different momenta have different curvatures in a magnetic field. The crucial bit is therefore to have a neuromorphic system that recognizes tracks of different momenta by having different neurons fire when tracks of a specific momentum are present. This “selectivity” of the network output is the toughest bit to obtain from the system.
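The figures of merit mentioned above can be computed from a record of which output neurons fired in each event. In the sketch below, efficiency is the fraction of track events in which at least one output neuron fired, and the fake rate is the fraction of noise-only events in which any neuron fired. The per-neuron selectivity score (the fraction of a neuron's firings on track events that come from its most frequent momentum value) is a hypothetical definition chosen for illustration, not necessarily the metric used in our study.

```python
from collections import Counter


def evaluate(records, n_neurons):
    """Compute efficiency, fake rate and a per-neuron selectivity score.

    records: list of (is_track, pt, fired_neurons) per event, where
    fired_neurons is the set of output neuron indices that spiked.
    """
    track = [r for r in records if r[0]]
    noise = [r for r in records if not r[0]]
    # Fraction of track events where at least one output neuron fired.
    efficiency = sum(1 for r in track if r[2]) / len(track) if track else 0.0
    # Fraction of noise-only events where any output neuron fired.
    fake_rate = sum(1 for r in noise if r[2]) / len(noise) if noise else 0.0

    selectivity = []
    for n in range(n_neurons):
        # Momentum values of the track events on which neuron n fired.
        pts = Counter(pt for _, pt, fired in track if n in fired)
        total = sum(pts.values())
        # 1.0 means the neuron responds to a single momentum value only.
        selectivity.append(max(pts.values()) / total if total else 0.0)
    return efficiency, fake_rate, selectivity
```

A network that is efficient but not selective would show many neurons with scores well below 1, since each would fire for tracks of several different momenta.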

What I have not mentioned so far is that simulating the neural system as what is called a “spiking neural network” (SNN) requires the user to choose a significant number of parameters (firing potentials, decay times of the neuron potentials, inhibition potentials, etcetera). This is thus an optimization problem of some complexity. We developed a genetic algorithm to solve it, and this is another interesting part of our work. Eventually, after evolving different SNN setups to increase the efficiency, reduce the random firing, and achieve high selectivity for the different tracks, we were able to select a network that works well.
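The genetic search can be sketched roughly as follows. This is a generic genetic algorithm with truncation selection, uniform crossover and Gaussian mutation, not the specific algorithm we developed; the parameter names and ranges in `BOUNDS` are hypothetical, and `toy_fitness` is a stand-in with a known optimum. In a real setting the fitness function would simulate the SNN on an event stream and reward high efficiency, low random firing and high selectivity.

```python
import random

# Hypothetical search ranges for a few SNN hyperparameters (arbitrary units).
BOUNDS = {
    "threshold": (0.5, 5.0),
    "tau_membrane": (0.01, 1.0),
    "inhibition": (0.0, 2.0),
}


def random_genome(rng):
    return {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}


def crossover(a, b, rng):
    # Uniform crossover: each gene is taken from one parent at random.
    return {k: (a[k] if rng.random() < 0.5 else b[k]) for k in BOUNDS}


def mutate(genome, rng, rate=0.2, scale=0.1):
    # Gaussian perturbation of some genes, clipped to the allowed range.
    out = dict(genome)
    for k, (lo, hi) in BOUNDS.items():
        if rng.random() < rate:
            out[k] = min(hi, max(lo, out[k] + rng.gauss(0.0, scale * (hi - lo))))
    return out


def evolve(fitness, pop_size=20, generations=30, elite=4, seed=0):
    rng = random.Random(seed)
    pop = [random_genome(rng) for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(pop, key=fitness, reverse=True)
        parents = ranked[:elite]  # truncation selection; elites survive unchanged
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - elite)]
        pop = parents + children
    return max(pop, key=fitness)


def toy_fitness(g):
    # Stand-in objective with a known optimum; a real one would run the SNN
    # and combine efficiency, fake rate and selectivity.
    return -((g["threshold"] - 2.0) ** 2
             + (g["tau_membrane"] - 0.3) ** 2
             + (g["inhibition"] - 1.0) ** 2)


best = evolve(toy_fitness)
print(best)
```

Keeping the best few networks unchanged from one generation to the next (elitism) guarantees that the best fitness found never decreases, while crossover and mutation keep exploring the parameter space.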

“But wait a minute”, I can hear a sharp reader object, “if you need to tune your network for the task you want solved, does this not mean you are effectively supervising the system?” Well, we are supervising the selection of the network that learns best by itself, so spin it any way you like… In a different, more “neuromorphically inspired” setup, we could imagine a pool of different networks receiving the same data, only one of which would evolve to respond well to the specific task of identifying tracks. This is indeed a kind of “natural selection” that our brain probably enacts when it learns.

When the paper is ready I will be happy to distribute it here… I only have to remind myself to get back to this page and add the link!
