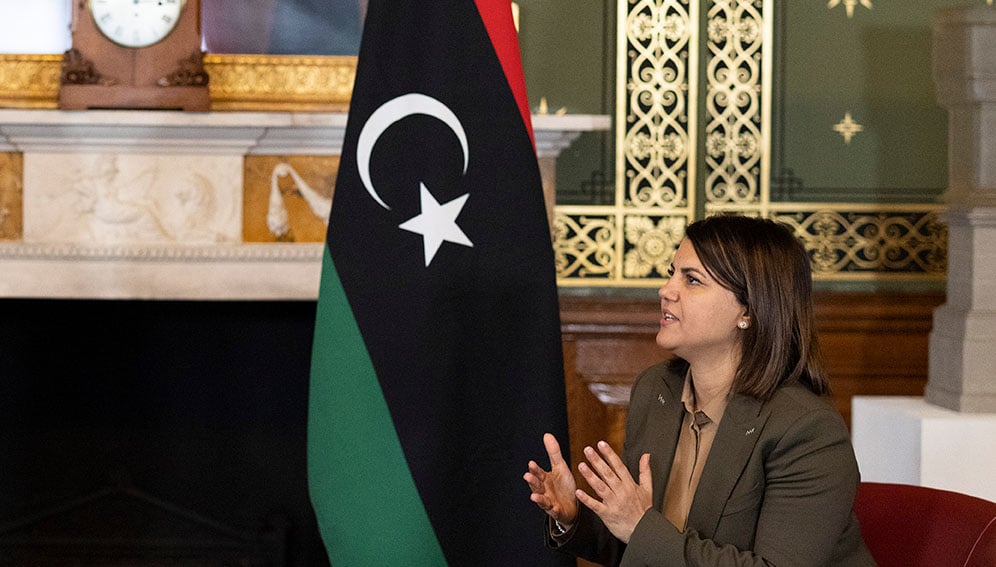

Systemic gender bias must be called out as we embrace the reality of AI, writes former Libyan foreign minister Najla Mohammed El Mangoush.

[LONDON] There is no doubt that AI is exerting increasing control over our lives – an impact that is felt far more by girls and women.

In Britain, a freedom of information battle between the Home Office and the campaign group Privacy International recently revealed that AI is influencing the fate of immigrants. The algorithm recommends whether to return people to their home country based on a range of data, including ethnicity and health markers, ready to be rubber-stamped by human officials.

For unaccompanied women, especially those who are pregnant or travelling with children, such algorithm-driven decisions do nothing to protect them from the disproportionate risks they face, including exploitation, sexual violence and trafficking.

This story forms part of an unsettling trend that reveals a sinister truth: the unstoppable rise of AI is worsening the global gender divide.

For years, AI has relentlessly attacked women’s personal security, spreading deepfakes, revenge porn, and online harassment, and erasing with a few clicks decades of hard-fought progress towards closing the gender gap.

Moldova’s recently reelected president, Maia Sandu, was repeatedly targeted with AI-generated deepfakes as part of a gendered disinformation campaign designed to weaken public trust in her ability to lead. Earlier this year, a single deepfake photo of Taylor Swift was reportedly viewed 47 million times before being taken down.

But this is a common experience even for women out of the public eye. Swarms of AI-powered bots harass women online, working tirelessly to uphold male-dominated power structures. According to one estimate, as much as 96 per cent of deepfakes are pornographic videos targeting women, the vast majority of which have been created without the featured person’s consent.

No doubt, AI is also making strides in transforming our lives for the better – from identifying new medical drug targets and breeding more productive crops, to defusing bombs and predicting ever more tumultuous weather patterns. But today’s systems are built on flawed, discriminatory data.

Even something as seemingly uncontroversial as health data is a minefield for women’s rights. Take the example of car safety measures – seatbelts, headrests and airbags. These are designed using the data collected from crash test dummies based on the male physique. The result? Women are far more likely to die or be injured in an accident, according to US-based campaign group Verity Now.

AI’s core problem is that it perpetuates and amplifies many existing biases like these, which subsequently remain uncorrected by AI’s predominantly white male Silicon Valley tech-bros with a product to push.

As a result, it is being weaponised by oppressive regimes and institutions and is rapidly becoming a tool for a very modern kind of oppression.

Look to Iran, where AI-driven facial recognition is being used by the government to track and crack down on women who do not meet its draconian dress codes – the violation of which can carry up to ten years in prison.

This time last year, an Iranian teenager, Armita Geravand, died after an altercation with morality police at a Tehran metro station. The alleged assault left the 16-year-old with head injuries from which she never recovered.

Tragic events like this have led the Muslim World League’s (MWL) Secretary-General, Mohammad bin Abdulkarim Al-Issa, to raise concerns about AI’s potential to manipulate ideologies and influence billions of people around the world, highlighting the need for ethical and moral oversight in the development of AI.

While the MWL – the world’s largest Islamic NGO – intends to discuss women’s rights in the context of the digital revolution at an upcoming summit on girls’ education in Pakistan in January, action is needed as well as discussion.

AI is set to cement itself as a tool for spreading misogyny, coding anti-women rhetoric deeper into our digital world. It will become our truth.

We need to go back to basics and improve the foundational data that AI is built upon. This requires urgent legislation to enforce gender equity standards and a council of female AI experts, activists, and policymakers to call out and confront systemic bias on a global scale.

Unfortunately, this may be another case in which the people who suffer under an unjust system must be the ones to overcome it. Women can get the job done, but they are woefully underrepresented. They make up just 22 per cent of AI talent globally, with less than 14 per cent in senior executive roles.

We must expedite moves to encourage women into the sector and ensure they are treated fairly and free from sexism throughout their careers.

‘Bias bounty hunters’

To draw women into this fight, I believe we must crowdsource solutions. Let us wage war on misogynistic AI by employing “bias bounty hunters” who will receive financial rewards for identifying and correcting damaging biases.

This means all sectors of society – from governments and NGOs to individual citizens – must actively participate in the conversation. In March, the UK-based group Women in Data will host the “world’s largest female data event”, focused on reducing AI bias and promoting gender diversity.

Other global initiatives, such as those by AnitaB.org India and Women in AI Africa, are also advancing efforts to support women’s representation and leadership in AI, fostering a more inclusive tech landscape worldwide.

The stakes could not be higher. The coming months are likely to see Trump dismantle safeguards on the development of AI – including an amendment to ban government policies that require the equitable design of AI tools – with Musk poised to lead unchecked, self-aggrandising development.

Without decisive action now, AI’s viral misogyny will push back hard-won progress on gender equality in every corner of the world.

Najla Mohammed El Mangoush was Libya’s first female foreign minister (2021-23) and the fifth in the Arab World. She was named in the BBC 100 Women 2021 list for her work building links with civil society organisations and received the International Women of Courage Award from the US State Department in 2022.

This piece was produced by SciDev.Net’s Global desk.
